OpenAI set up a team to control superintelligent AI, then neglected it

13:36 19/05/2024

2 min read

A research team at OpenAI whose mission is to find ways to control “super-intelligent” AI systems did not receive the computing resources it needed to do its work. This, along with other disagreements, led several key team members to resign, including team leader Jan Leike.

Leike believes that OpenAI should focus more on ensuring the safety of the next generations of AI. He is concerned that OpenAI is prioritizing new product launches over addressing important safety issues.

OpenAI did not immediately respond to questions about the resources promised and allocated to the team.

The team was founded in July 2023 with the ambitious goal of solving the core technical challenges of controlling super-intelligent AI within four years. However, as new product launches took up more of OpenAI leadership’s attention, the team had to fight for resources.

Over the past few months my team has been sailing against the wind. Sometimes we were struggling for compute and it was getting harder and harder to get this crucial research done.

— Jan Leike (@janleike) May 17, 2024

Although the team published several safety studies and awarded millions of dollars in grants to outside researchers, its members felt sidelined.

Leike warns that building machines smarter than humans is an inherently risky endeavor. He believes that in recent years safety culture has taken a back seat to flashy products.

We’re really grateful to Jan for everything he's done for OpenAI, and we know he'll continue to contribute to the mission from outside. In light of the questions his departure has raised, we wanted to explain a bit about how we think about our overall strategy.

First, we have… https://t.co/djlcqEiLLN

— Greg Brockman (@gdb) May 18, 2024

Disagreements between OpenAI co-founder Ilya Sutskever and CEO Sam Altman further complicated the situation. Sutskever had pushed for Altman to be fired because he was concerned that the CEO had not been honest with the board. However, under pressure from investors and employees, Altman was reinstated, and Sutskever did not return to work.

After Leike resigned, Altman acknowledged that there was still much work to be done and promised to address it. However, he has not yet made specific commitments.

Instead of maintaining a separate team, OpenAI will distribute its safety researchers across different departments. This has raised concerns that OpenAI’s AI development will be less safe than before.

Bài viết liên quan

Palm Mini 2 Ultra: Máy tính bảng mini cho game thủ

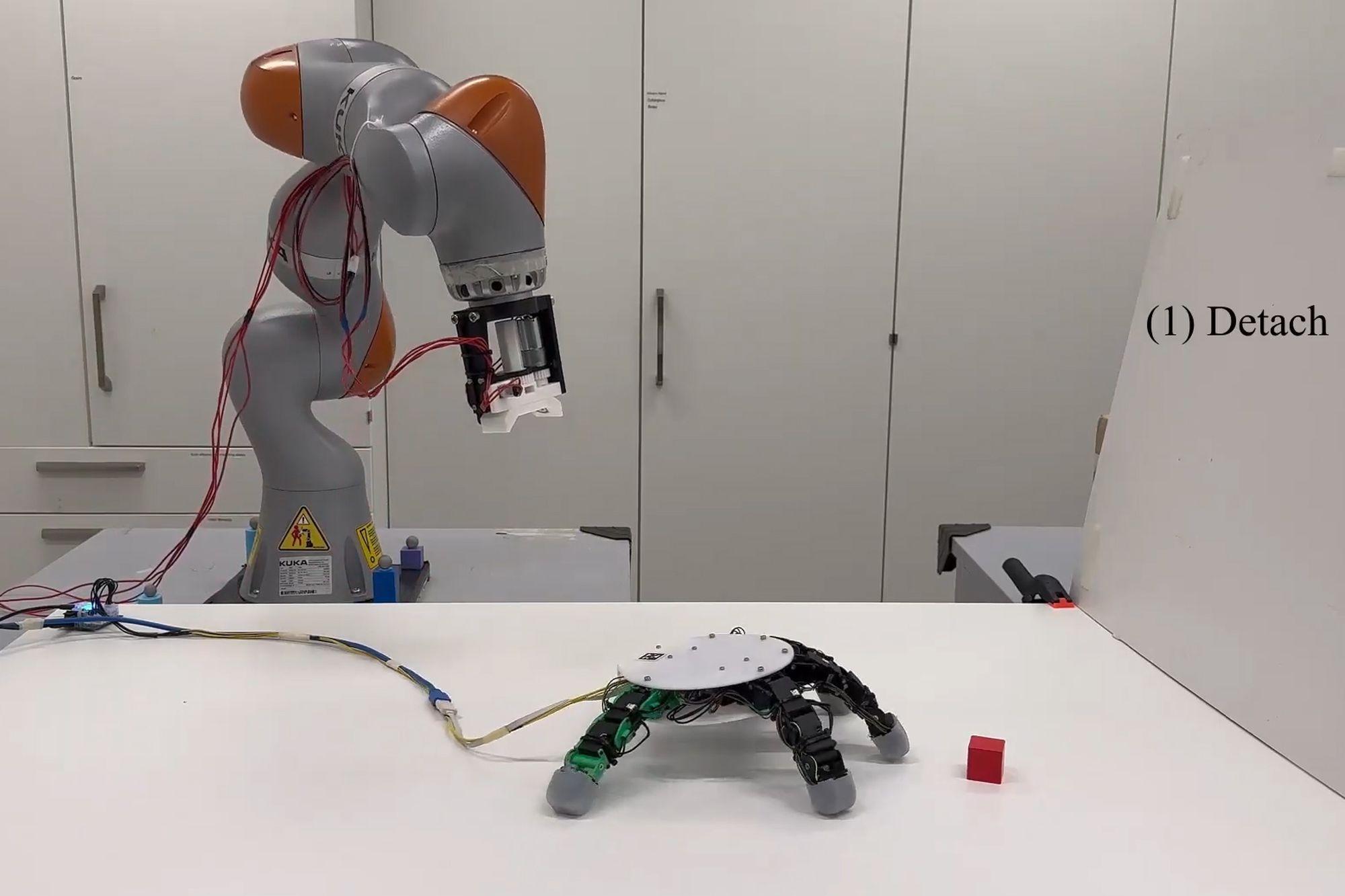

Robot with smart grip

NASA’s goal of conquering the Sun

Apple launches a new feature that makes it easier to use your phone while sitting on vehicle

Google Photos launches smart search feature “Ask for photos”

Roku streams live MLB baseball games for free

Gun detection AI technology company uses Disney to successfully persuade New York

Hackers claim to have collected 49 million Dell customer addresses before the company discovered the breach

Thai food delivery app Line Man Wongnai plans to IPO in Thailand and the US in 2025

Google pioneered the development of the first social networking application for Android

AI outperforms humans in gaming: Altera receives investment from Eric Schmidt

TikTok automatically labels AI content from platforms like DALL·E 3

Dell’s data was hacked, revealing customers’ home address information

Cracking passwords using Brute Force takes more time, but don’t rejoice!

US lawsuit against Apple: What will happen to iPhone and Android?

The UAE will likely help fund OpenAI’s self-produced chips

AI-composed blues music lacks human flair and rhythm

iOS 17: iPhone is safer with anti-theft feature

Samsung launches 2024 OLED TV with the highlight of breakthrough anti-glare technology

REGISTER

TODAY

Sign up to get the inside scoop on today's biggest stories in markets, technology delivered daily.

By clicking “Sign Up”, you accept our Terms of Service and Privacy Policy. You can opt out at any time.

Nhận xét (0)