WitnessAI develops protection systems for generative AI models

10:43 22/05/2024

Generative artificial intelligence (AI) can create new content, but it also carries risks such as bias and the spread of harmful text. So how can it be used safely?

Rick Caccia, CEO of WitnessAI, believes it is possible.

“Securing AI models is a real issue, and for AI researchers it’s very important, but safety of use is another issue,” Caccia said. “I liken it to a sports car: having a powerful engine (the AI model) doesn’t help if you don’t have good brakes and steering. Control is as important as speed.”

Businesses are very interested in such controls: generative AI’s potential to boost productivity is attractive, but the technology also comes with many limitations.

An IBM poll found that 51% of executives are hiring for generative AI-related positions that did not exist until this year. However, according to a Riskonnect survey, only 9% of companies said they were prepared to manage the threats, including those related to privacy and intellectual property, that arise from their use of generative AI.

WitnessAI’s platform acts as an intermediary, intercepting interactions between employees and the custom generative AI models their company uses (not models gated behind an API like OpenAI’s GPT-4, but ones more along the lines of Meta’s Llama 3). The platform then applies policies and protections to minimize risk.

“One of the promises of enterprise AI is that it helps unlock and democratize enterprise data for employees so they can work better. But unlocking too much sensitive data – or having it leaked or stolen – would be a problem,” Caccia said.

WitnessAI provides access to several modules, each focused on a different type of generative AI risk. For example, one module lets organizations deploy rules that prevent employees in specific groups from using generative AI tools in unauthorized ways (such as asking about pre-release earnings reports or pasting in internal source code). Another module removes proprietary and sensitive information from requests sent to the model and implements techniques to protect the model against attacks that could force it to misbehave.
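To make the idea of an intermediary that applies group-based rules and strips sensitive data more concrete, here is a minimal sketch in Python. It is not WitnessAI's implementation: the group name, the blocked-topic pattern, the redaction patterns, and the `check_prompt` function are all hypothetical and chosen only for illustration.

```python
import re
from dataclasses import dataclass

# Hypothetical rules for illustration only; not WitnessAI's actual policies.
# Topics that members of a given employee group may not ask about.
BLOCKED_TOPICS = {
    "finance": [re.compile(r"\bpre-release earnings\b", re.IGNORECASE)],
}

# Simple patterns for sensitive strings and the placeholders that replace them.
REDACTIONS = [
    (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
]


@dataclass
class Decision:
    allowed: bool       # whether the prompt may be forwarded to the model
    prompt: str         # the redacted prompt (empty if blocked)
    reason: str = ""    # why the prompt was blocked, if it was


def check_prompt(group: str, prompt: str) -> Decision:
    """Apply group-based rules first, then redact sensitive strings."""
    for pattern in BLOCKED_TOPICS.get(group, []):
        if pattern.search(prompt):
            return Decision(False, "", f"blocked for group '{group}'")

    cleaned = prompt
    for pattern, placeholder in REDACTIONS:
        cleaned = pattern.sub(placeholder, cleaned)
    return Decision(True, cleaned)
```

In this sketch, a prompt from the hypothetical "finance" group that mentions pre-release earnings is rejected outright, while any other prompt is forwarded with email addresses and SSN-like numbers replaced by placeholders. A production system would need far more robust detection than regular expressions.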

“We think the best way to help businesses is to define the problem in a logical way – for example, the safe adoption of AI – and then sell a solution that solves the problem,” Caccia said. “Chief information security officers (CISOs) want to protect businesses, and WitnessAI helps them do that by ensuring data protection, preventing prompt injection, and enforcing identity-based policies. Chief privacy officers (CPOs) want to ensure that current – and upcoming – regulations are followed, and we provide them with the ability to monitor and report on activity and risk.”

However, WitnessAI also poses a privacy dilemma: all data must pass through its platform before reaching the model. The company is transparent about this, and even provides tools to track which models employees access, the questions they ask of those models, and the responses they receive. But that could create privacy risks of its own.

Mr. Caccia affirmed that the platform is specifically designed and encrypted to ensure customers’ confidential data is not leaked. It operates with millisecond latency and has regulatory separation built in. This means that each business’s AI activity is protected in isolation, which is fundamentally different from the usual multi-tenant software-as-a-service (SaaS) model, in which many customers are served on the same platform.

He said WitnessAI creates a separate instance of the platform for each customer, encrypted with that customer’s own key. As a result, the customer’s AI activity data is completely isolated, and even WitnessAI cannot access it.

However, for employees concerned about the surveillance capabilities of the WitnessAI platform, the issue is more complicated. Surveys show that people generally dislike having their activities monitored at work, regardless of the reason, and believe that this negatively affects morale. Nearly a third of Forbes survey respondents said they might consider leaving their jobs if employers monitored their online activities and communications.

Despite this, Mr. Caccia confirmed that interest in the WitnessAI platform remains strong, with 25 companies in the testing phase. (The service will officially launch in the third quarter.) Additionally, WitnessAI has raised $27.5 million from venture capital funds, demonstrating confidence in the platform’s potential.

This investment will be used to grow WitnessAI’s team from 18 people to 40 by the end of the year. Scaling is key for WitnessAI to beat competitors in the emerging space of AI model governance and compliance solutions, not only tech giants like AWS, Google and Salesforce but also startups like CalypsoAI.

“We planned to operate until 2026 even if we didn’t sell any products,” Mr. Caccia said. “But currently, the number of potential customers is nearly 20 times what we need to hit our sales target for this year. This is our first round of funding and public launch, and enabling safe AI is a new frontier; all of our features are developed with this new market in mind.”

Bài viết liên quan

Palm Mini 2 Ultra: Máy tính bảng mini cho game thủ

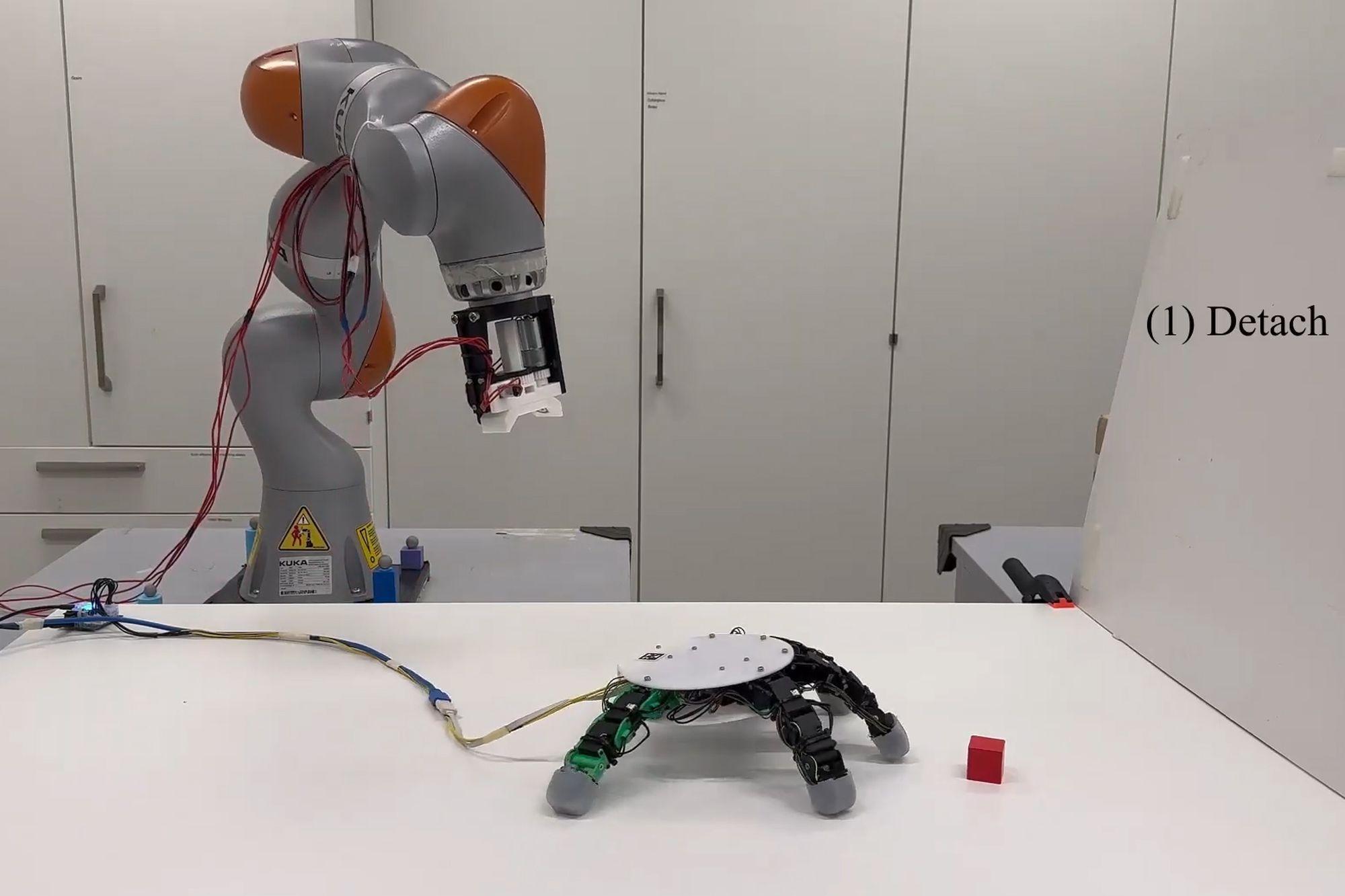

Robot with smart grip

NASA’s goal of conquering the Sun

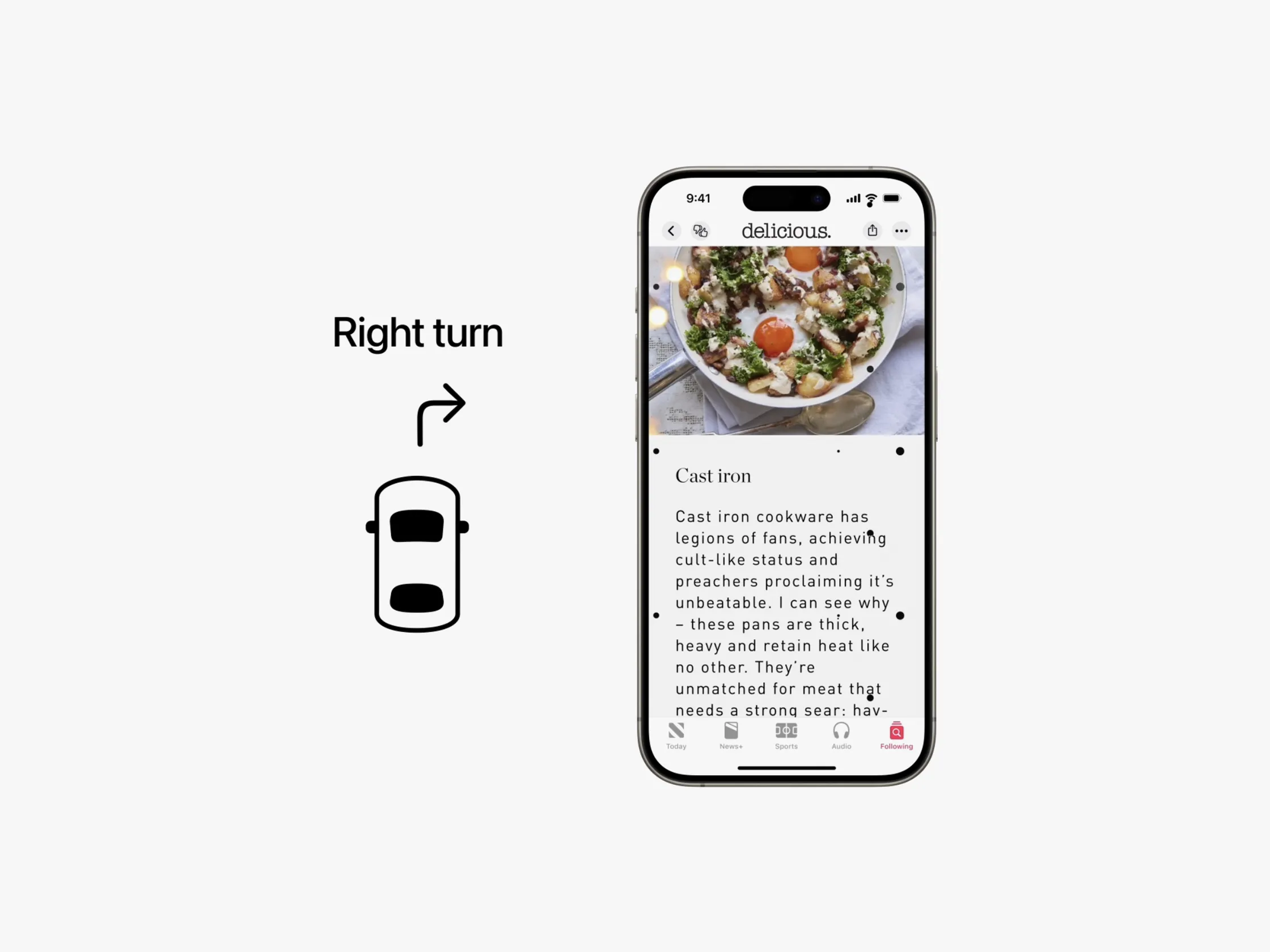

Apple launches a new feature that makes it easier to use your phone while sitting on vehicle

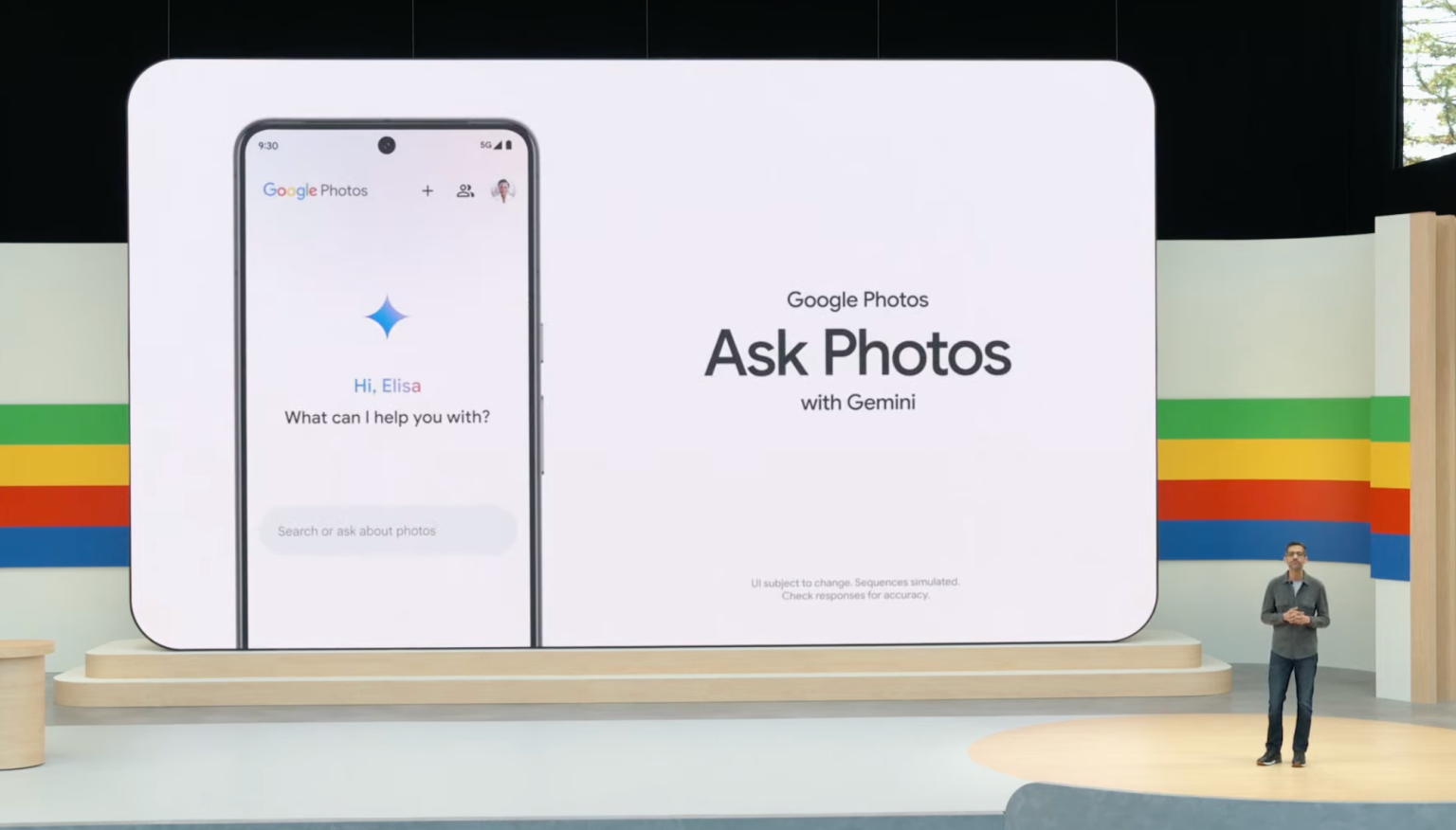

Google Photos launches smart search feature “Ask for photos”

Roku streams live MLB baseball games for free

Gun detection AI technology company uses Disney to successfully persuade New York

Hackers claim to have collected 49 million Dell customer addresses before the company discovered the breach

Thai food delivery app Line Man Wongnai plans to IPO in Thailand and the US in 2025

Google pioneered the development of the first social networking application for Android

AI outperforms humans in gaming: Altera receives investment from Eric Schmidt

TikTok automatically labels AI content from platforms like DALL·E 3

Dell’s data was hacked, revealing customers’ home address information

Cracking passwords using Brute Force takes more time, but don’t rejoice!

US lawsuit against Apple: What will happen to iPhone and Android?

The UAE will likely help fund OpenAI’s self-produced chips

AI-composed blues music lacks human flair and rhythm

iOS 17: iPhone is safer with anti-theft feature

Samsung launches 2024 OLED TV with the highlight of breakthrough anti-glare technology

REGISTER

TODAY

Sign up to get the inside scoop on today's biggest stories in markets, technology delivered daily.

By clicking “Sign Up”, you accept our Terms of Service and Privacy Policy. You can opt out at any time.

Nhận xét (0)