UK opens office in San Francisco to address AI risks

11:13 20/05/2024


The UK is expanding its efforts to ensure artificial intelligence (AI) safety ahead of the AI safety summit taking place in Seoul, South Korea, later this week.

The AI Safety Institute, a British body founded in November 2023 with the goal of assessing and addressing risks in AI platforms, said it will open a second office in San Francisco, US.

The aim is to get closer to the current epicenter of AI development: the Bay Area is home to OpenAI, Anthropic, Google and Meta, among other companies building foundational AI technology.

Foundation models are the building blocks of generative AI services and other applications. It is notable that although the UK has signed a Memorandum of Understanding (MOU) with the US for the two countries to cooperate on AI safety initiatives, the UK is still choosing to invest in building a direct presence for itself in the US to tackle the issue.

“Having employees in San Francisco will give them access to the headquarters of many of these AI companies,” Michelle Donelan, the UK’s Secretary of State for Science, Innovation and Technology, said in an interview with TechCrunch. “Some of those companies have bases in the UK, but we think it would be useful to have a base there as well, to have access to an additional pool of talent, and to be able to work even more closely with the United States.”

Part of the reason is that for the UK, getting closer to that epicenter is not only useful for understanding what is being built but also gives the UK clearer visibility into these companies – which is important, because AI and technology in general are seen by the UK as a huge opportunity for economic growth and investment.

And given the latest developments at OpenAI surrounding its Superalignment team, this seems an especially opportune time to establish a presence there.

The AI Safety Institute is currently a relatively modest organization. It has just 32 staff, a veritable David to the Goliath of AI technology when you consider the billions of dollars of investment pouring into the companies that build AI models and, therefore, their own economic incentives to bring their technologies to market and into the hands of paying users.

One of the AI Safety Institute’s most notable developments was the release earlier this month of Inspect, its first toolkit for testing the safety of foundation AI models.

Donelan today called that release a “phase one” effort. Not only has benchmarking models proved challenging to date, but corporate participation is for now largely voluntary and inconsistent. As a senior source at a UK regulator pointed out, companies are under no legal obligation to have their models tested, and not every company is willing to have its models tested before release. That means that by the time a risk is identified, it may already be too late.

Donelan said the AI Safety Institute is still developing the best way to work with AI companies to evaluate them. “Our assessment process is an emerging new science,” she said. “So with each review, we develop the process and refine it further.”

Donelan said one goal in Seoul is to present Inspect to the regulators attending the summit, with the aim of getting them to adopt it as well.

“We now have a review system. Phase two also needs to be aimed at ensuring AI safety for the whole of society,” she said.

In the longer term, Donelan believes the UK will legislate more on AI. However, echoing what Prime Minister Rishi Sunak has said on the subject, she said the UK will hold off on doing so until the scope of AI risks is better understood.

“We do not believe in hastily promulgating laws before we have comprehensive knowledge and understanding,” she explained. She mentioned the recent international AI safety report, which focused on building a comprehensive picture of AI research to date. The report “shows that there are many gaps in knowledge and that we need to encourage and promote more research globally.”

“In addition, the legislative process in the UK takes about a year. If we had started drafting legislation from the beginning instead of holding the AI Safety Summit (which took place last November), by now we would still be in the drafting process and would not have anything to show for it.”

Ian Hogarth, chair of the AI Safety Institute, said: “From its early days, the Institute has emphasized the importance of taking an international approach to AI safety, sharing research and collaborating with other countries to test advanced AI models and anticipate their risks. Today marks a turning point that will allow us to further advance this agenda. We are proud to expand our operations in a region rich in technology talent, adding to the incredible expertise our staff in London have brought from day one.”

Bài viết liên quan

Palm Mini 2 Ultra: Máy tính bảng mini cho game thủ

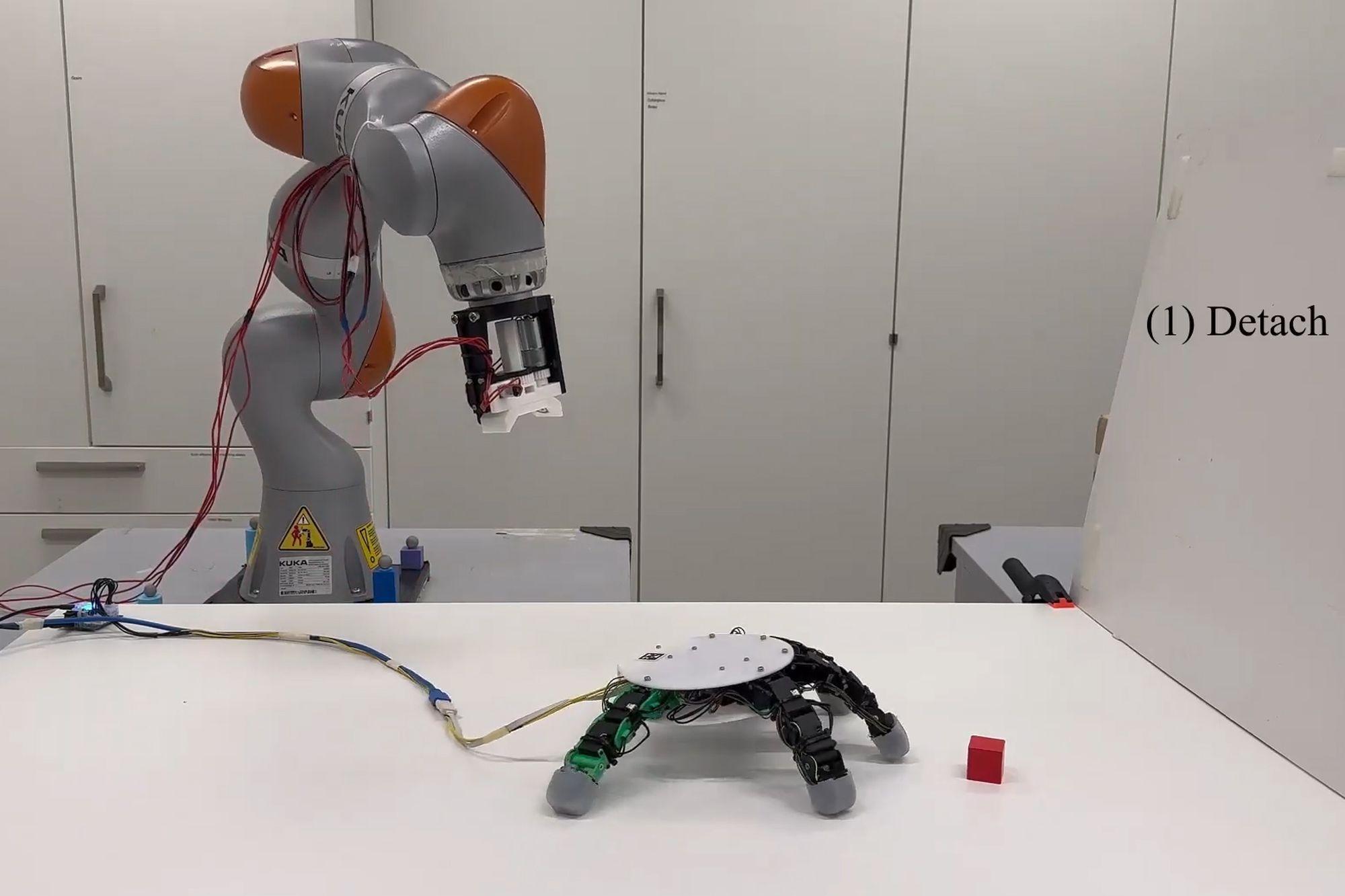

Robot with smart grip

NASA’s goal of conquering the Sun

Apple launches a new feature that makes it easier to use your phone while sitting on vehicle

Google Photos launches smart search feature “Ask for photos”

Roku streams live MLB baseball games for free

Gun detection AI technology company uses Disney to successfully persuade New York

Hackers claim to have collected 49 million Dell customer addresses before the company discovered the breach

Thai food delivery app Line Man Wongnai plans to IPO in Thailand and the US in 2025

Google pioneered the development of the first social networking application for Android

AI outperforms humans in gaming: Altera receives investment from Eric Schmidt

TikTok automatically labels AI content from platforms like DALL·E 3

Dell’s data was hacked, revealing customers’ home address information

Cracking passwords using Brute Force takes more time, but don’t rejoice!

US lawsuit against Apple: What will happen to iPhone and Android?

The UAE will likely help fund OpenAI’s self-produced chips

AI-composed blues music lacks human flair and rhythm

iOS 17: iPhone is safer with anti-theft feature

Samsung launches 2024 OLED TV with the highlight of breakthrough anti-glare technology

REGISTER

TODAY

Sign up to get the inside scoop on today's biggest stories in markets, technology delivered daily.

By clicking “Sign Up”, you accept our Terms of Service and Privacy Policy. You can opt out at any time.

Nhận xét (0)