Google was warned about the risk of censorship when it defaulted to scanning calls with AI

00:50 16/05/2024

3 min read

Google has just introduced an artificial intelligence (AI) feature capable of analyzing live voice calls to detect signs of fraud. With this technology, Android users will in future be warned about suspicious calls, helping to protect them from sophisticated scammers.

Image source: Google

The feature works by comparing call content with a large database of conversation patterns commonly found in financial scams. This lets the AI system pick out warning signs, such as a caller using threatening or coercive language, or coaxing the user into revealing sensitive personal information. When it detects such signs, the system warns users so they can take precautions and avoid falling for the scam.
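As a rough illustration of the pattern-matching idea, here is a toy sketch in Python. It is not Google's implementation: the category names, regular expressions and warning threshold are all hypothetical, and a real system described as AI-based would not rely on simple keyword rules like these.

```python
import re

# Hypothetical pattern categories, invented for illustration only.
# They are not taken from Google or from any real scam database.
SCAM_PATTERNS = {
    "threat": re.compile(
        r"\b(arrest(ed)?|lawsuit|account (will be )?(frozen|suspended))\b", re.I
    ),
    "urgency": re.compile(r"\b(immediately|right now|within the hour)\b", re.I),
    "sensitive_request": re.compile(
        r"\b(pin|password|one[- ]time code|card number)\b", re.I
    ),
}


def scan_transcript(text: str) -> list[str]:
    """Return the sorted names of the pattern categories found in the text."""
    return sorted(
        name for name, pattern in SCAM_PATTERNS.items() if pattern.search(text)
    )


def should_warn(text: str, threshold: int = 2) -> bool:
    """Warn when at least `threshold` distinct categories match (assumed rule)."""
    return len(scan_transcript(text)) >= threshold


if __name__ == "__main__":
    call = "Your account will be frozen unless you read me your card number right now"
    print(scan_transcript(call))
    print(should_warn(call))
```

Requiring several categories to match before warning is one simple way to cut down on false alarms from a single innocent word, at the cost of missing subtler scams.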

Although Google affirms that this new feature will bring many benefits to users, security and privacy experts have expressed concerns.

They are concerned that this feature could facilitate content censorship that takes place right on users’ devices. This can lead to limiting access to information and stifling freedom of expression.

The crux of the problem lies in "on-device scanning", a technology that has been controversial in recent years. Apple once planned to scan content on devices to search for child sexual abuse material (CSAM) but abandoned the plan after a backlash.

According to Professor Matthew Green, a cryptography expert at Johns Hopkins University, the use of artificial intelligence (AI) models to monitor messages and voice calls in order to detect and report illegal behavior could well become a reality in the near future, just a few years from now.

Echoing experts in the United States, many cybersecurity experts in Europe have also voiced concerns about Google's new anti-scam feature. They worry that it could be abused for social surveillance, violating users' privacy.

Lukasz Olejnik, an independent researcher on security and privacy in Poland, said that Google's collection of information about the websites users visit, the time they spend on those websites and the actions they take can lead to the creation of detailed profiles of users' online behavior. Such profiles can then be used for social surveillance, for tracking political activities, or even sold to third parties.

Google has unveiled an LLM model that, by monitoring phone calls, will be able to warn of suspected fraud. Great!

However, this also means that technical capabilities have already been, or are being developed to monitor calls, creation, writing texts or documents, for example… pic.twitter.com/TD1d3g8P2M

— Lukasz Olejnik (@lukOlejnik) May 15, 2024

According to his warning, tracking technology can be abused to police content that is illegal, harmful, hateful or violates some people’s ethical standards. This raises concerns about freedom of speech and personal privacy.

According to Michael Veale, associate professor of technology law at University College London (UCL), AI conversation scanning can be abused for many different purposes, serving the desire of regulators and legislators for control. He is concerned that this feature could create an infrastructure for on-device scanning, leading to excessive tracking and control of users.

The concerns of experts in Europe have a basis in reality. The European Union (EU) is proposing legislation that would require platforms to scan private messages, which critics say would violate democratic rights in the region.

Under this proposal, platforms would be required to build content scanning into users' devices in order to comply with the law. However, this could produce false reports on a massive scale, harming users.

Currently, it is unclear how Google will handle these concerns. Some experts say Google needs to be more transparent about how its AI conversation scanning works and offer stronger privacy protections for users.

Bài viết liên quan

Palm Mini 2 Ultra: Máy tính bảng mini cho game thủ

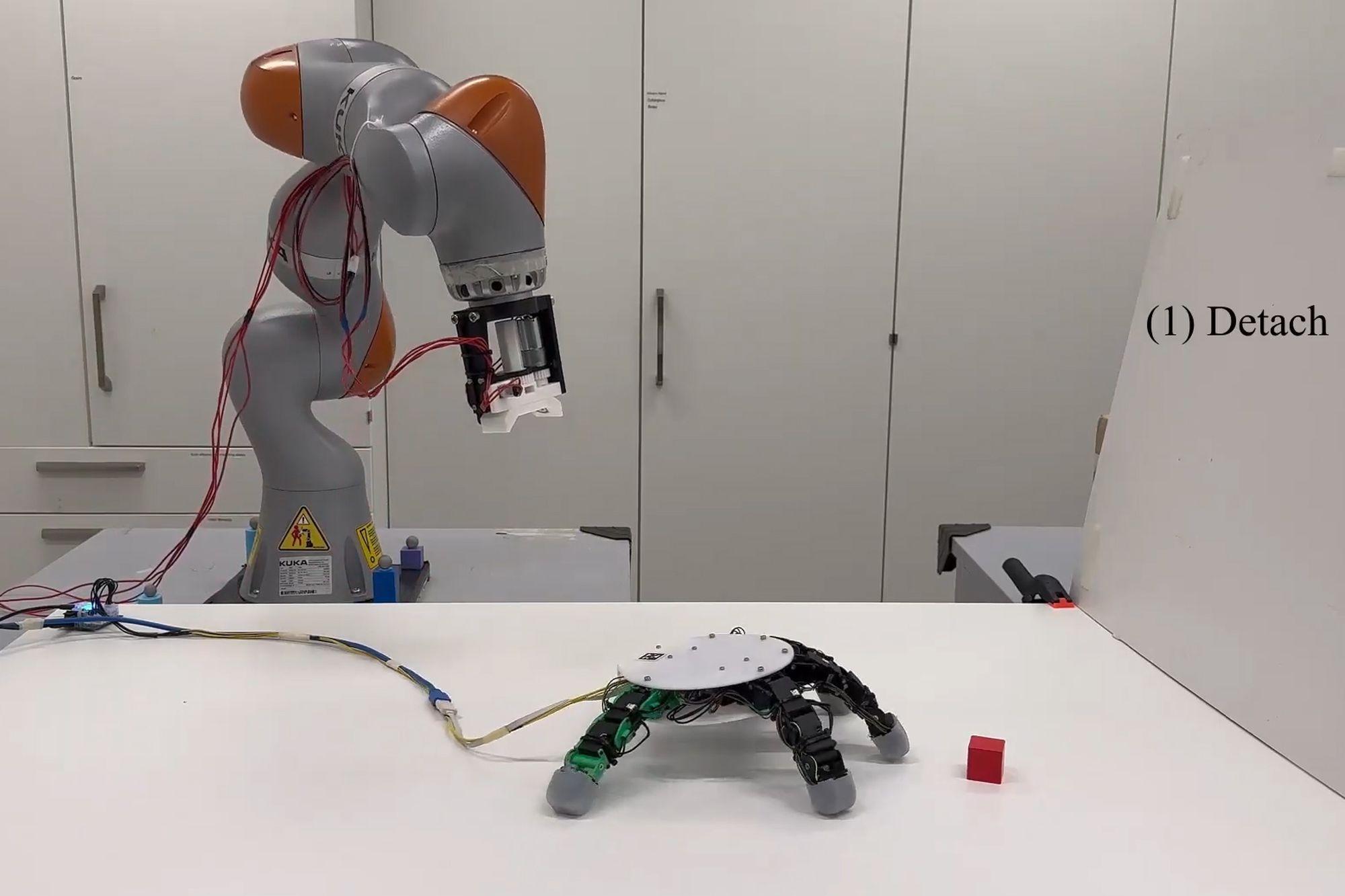

Robot with smart grip

NASA’s goal of conquering the Sun

Apple launches a new feature that makes it easier to use your phone while sitting on vehicle

Google Photos launches smart search feature “Ask for photos”

Roku streams live MLB baseball games for free

Gun detection AI technology company uses Disney to successfully persuade New York

Hackers claim to have collected 49 million Dell customer addresses before the company discovered the breach

Thai food delivery app Line Man Wongnai plans to IPO in Thailand and the US in 2025

Google pioneered the development of the first social networking application for Android

AI outperforms humans in gaming: Altera receives investment from Eric Schmidt

TikTok automatically labels AI content from platforms like DALL·E 3

Dell’s data was hacked, revealing customers’ home address information

Cracking passwords using Brute Force takes more time, but don’t rejoice!

US lawsuit against Apple: What will happen to iPhone and Android?

The UAE will likely help fund OpenAI’s self-produced chips

AI-composed blues music lacks human flair and rhythm

iOS 17: iPhone is safer with anti-theft feature

Samsung launches 2024 OLED TV with the highlight of breakthrough anti-glare technology
