Controversy over the transparency of OpenAI’s new Safety and Security Committee

01:34 29/05/2024

Artificial intelligence company OpenAI has formed a new committee to oversee “critical” safety and security issues related to the company’s projects and operations. However, the move is likely to draw criticism from tech ethicists, because OpenAI staffed the committee with company insiders, including CEO Sam Altman, rather than independent outside experts.

The Safety and Security Committee includes OpenAI board members Bret Taylor, Adam D’Angelo and Nicole Seligman and CEO Sam Altman, along with Chief Scientist Jakub Pachocki, Head of Preparedness Aleksander Madry, Head of Safety Systems Lilian Weng, Head of Security Matt Knight and Head of Alignment Science John Schulman. According to the company’s blog post, the committee will spend the next 90 days evaluating OpenAI’s safety processes and safeguards. It will then share its findings and recommendations with the full OpenAI board for review. OpenAI said it will publish an update on any recommendations it adopts “in a manner consistent with safety and security”.

OpenAI says it has recently begun training its next frontier AI model and expects the resulting systems to take it to the next level of capability on the path toward artificial general intelligence (AGI). The company says that while it is proud to build and release models that lead the industry in both capability and safety, it welcomes robust debate at this critical moment.

Over the past few months, a number of senior employees have left the safety side of OpenAI’s technical team, and some of them have voiced concerns about what they see as the company’s deliberate deprioritization of AI safety.

AI policy researcher Gretchen Krueger, who recently left OpenAI, echoed the concerns of former OpenAI safety lead Jan Leike, calling on the company to improve accountability and transparency and to “be more careful in its use of its own technology.”

I resigned a few hours before hearing the news about @ilyasut and @janleike, and I made my decision independently. I share their concerns. I also have additional and overlapping concerns.

— Gretchen Krueger (@GretchenMarina) May 22, 2024

Quartz notes that, besides Sutskever, Kokotajlo, Leike and Krueger, at least five of OpenAI’s most safety-minded employees have quit or been forced out since late last year. Former OpenAI board members Helen Toner and Tasha McCauley have also spoken out: in a piece for The Economist published Sunday, they write that, with Altman at the helm, they do not believe OpenAI can hold itself accountable.

“Based on our experience, we believe that self-governance cannot reliably withstand the pressure of profit incentives,” Toner and McCauley wrote.

TechCrunch reported earlier this month that OpenAI’s Superalignment team, which was responsible for developing ways to control “superintelligent” AI systems, was promised 20% of the company’s computing resources but rarely received even a fraction of that. The Superalignment team has since been disbanded, and much of its work has been placed under Schulman and a safety advisory group that OpenAI formed in December.

While calling for AI regulation, OpenAI has also worked to shape that regulation, hiring an in-house lobbying team and lobbyists at an ever-expanding number of law firms, and spending hundreds of thousands of dollars on U.S. lobbying in the fourth quarter of 2023 alone.

To head off accusations that the executive-dominated Safety and Security Committee amounts to little more than ethics-washing, OpenAI has pledged to retain third-party “safety, security and technical” experts to support the committee’s work, including cybersecurity veteran Rob Joyce and former U.S. Department of Justice official John Carlin. However, beyond Joyce and Carlin, the company has not disclosed the size or makeup of this group of outside experts, nor has it shed light on the limits of the group’s power and influence over the committee.

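In a post on X, Bloomberg columnist Parmy Olson argues that corporate oversight boards like the Safety and Security Committee, much like Google’s Advanced Technology External Advisory Council, do virtually nothing in the way of actual oversight. Notably, OpenAI says it is looking to address “legitimate criticisms” of its work through the committee, though what counts as legitimate is, of course, in the eye of the beholder.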

OpenAI just created an oversight board that’s filled with its own executives and Altman himself. This is a tried and tested approach to self-regulation in tech that does virtually nothing in the way of actual oversight. https://t.co/X7ay4IijXF

— Parmy Olson (@parmy) May 28, 2024

Altman once promised that outsiders would play an important role in OpenAI’s governance. In a 2016 New Yorker profile, he said OpenAI would devise a way to allow large swaths of the world to elect representatives to a governance board. That never happened, and at this point it seems unlikely that it will.

Bài viết liên quan

Palm Mini 2 Ultra: Máy tính bảng mini cho game thủ

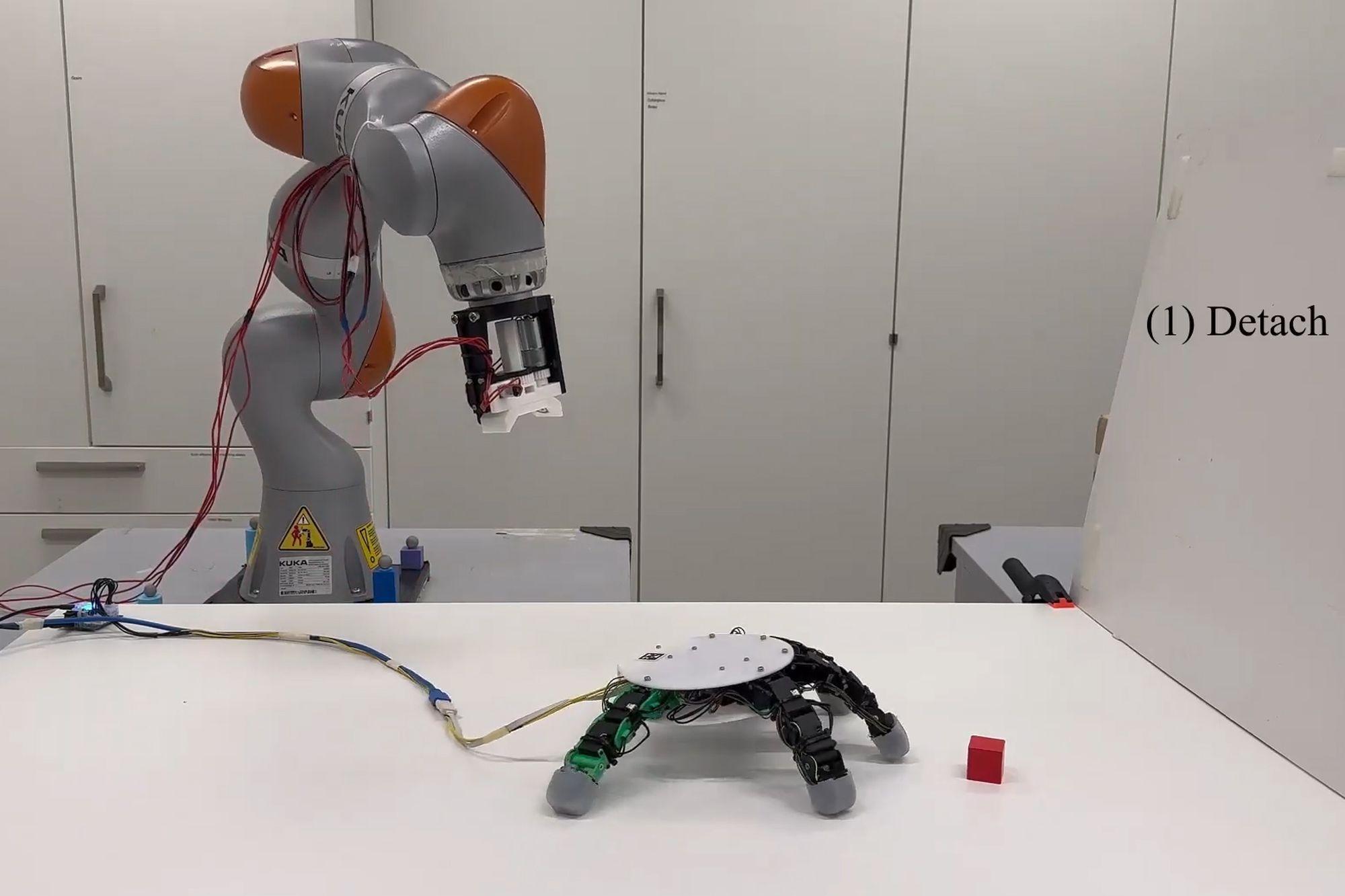

Robot with smart grip

NASA’s goal of conquering the Sun

Apple launches a new feature that makes it easier to use your phone while sitting on vehicle

Google Photos launches smart search feature “Ask for photos”

Roku streams live MLB baseball games for free

Gun detection AI technology company uses Disney to successfully persuade New York

Hackers claim to have collected 49 million Dell customer addresses before the company discovered the breach

Thai food delivery app Line Man Wongnai plans to IPO in Thailand and the US in 2025

Google pioneered the development of the first social networking application for Android

AI outperforms humans in gaming: Altera receives investment from Eric Schmidt

TikTok automatically labels AI content from platforms like DALL·E 3

Dell’s data was hacked, revealing customers’ home address information

Cracking passwords using Brute Force takes more time, but don’t rejoice!

US lawsuit against Apple: What will happen to iPhone and Android?

The UAE will likely help fund OpenAI’s self-produced chips

AI-composed blues music lacks human flair and rhythm

iOS 17: iPhone is safer with anti-theft feature

Samsung launches 2024 OLED TV with the highlight of breakthrough anti-glare technology

REGISTER

TODAY

Sign up to get the inside scoop on today's biggest stories in markets, technology delivered daily.

By clicking “Sign Up”, you accept our Terms of Service and Privacy Policy. You can opt out at any time.

Nhận xét (0)