Apple’s new feature opens up the future of smart glasses

12:29 16/09/2024

3 min read

The iPhone 16 launch event brought us a very interesting feature: ‘Visual Intelligence’. With this feature, you can turn your iPhone into a ‘mobile information library’. Just point the camera at a dog, a poster or anything else you want to know about, and your iPhone will automatically search for and show you relevant information.

This feature is convenient when used with the new camera button on the iPhone. But more importantly, it is a stepping stone toward more advanced technologies in the future. For example, Apple will need tools like this to develop augmented reality (AR) glasses.

With Visual Intelligence, exploring the world around you becomes more fun than ever, because you can interact directly with what you see. Imagine you are walking around and come across a fancy restaurant. With smart glasses, you could just look at it, ask a question, and get the information you need. This is the kind of experience that Apple introduced with the Visual Intelligence feature on iPhone.

Meta has shown clearly that smart glasses combined with an AI virtual assistant can be an extremely useful tool for recognizing surrounding objects. Given Meta’s success, it is not difficult to imagine Apple creating a similar product, but executed at a much higher level. What would set it apart is that Apple can connect these glasses to all the applications and personal information on your iPhone, making the image recognition feature more convenient than ever to use.

Apple already has the Vision Pro, a device equipped with cameras. However, because the Vision Pro is quite bulky, few people wear it outside. Moreover, the devices we already have at home are usually enough for most tasks. As a result, there have been many rumors that Apple is developing a more compact pair of AR glasses, similar to regular eyeglasses. This is the ultimate goal that Apple is aiming for with this technology.

In fact, Apple’s AR glasses may be a long way off. According to Bloomberg’s Mark Gurman, while there are reports that the product will launch in 2027, experts inside Apple believe the technology is not mature enough to bring the product to users in the next few years.

Although there is no official information, it is clear that Apple is investing a lot in developing software for future wearable devices. The Visual Intelligence feature could be a strategic move, helping Apple create unique and smarter experiences on its smart glasses products. Starting now will give Apple enough time to research and improve this technology before launching it to the market.

This approach isn’t new to Apple. The company had been integrating augmented reality (AR) technology into the iPhone for years before the Vision Pro arrived. While the Vision Pro is more of a VR device than an AR device, it’s clearly a precursor to what could eventually become AR glasses. Along with hardware upgrades, Apple could also continue to refine software features like Visual Intelligence that are already on the iPhone. And when the pieces are ready, it can combine all the best ideas into a single glasses-like product.

Smart glasses could be the next big thing in technology, with big names like Meta and Snap working hard on AR glasses. Google has already shown off prototypes, and Qualcomm is working with Samsung and Google on mixed reality glasses. If Apple were to enter the game with its own smart glasses, Visual Intelligence could be a significant competitive advantage. But first, we need to see Apple do it well on its own iPhones.

Từ khoá:

Bài viết liên quan

Palm Mini 2 Ultra: Máy tính bảng mini cho game thủ

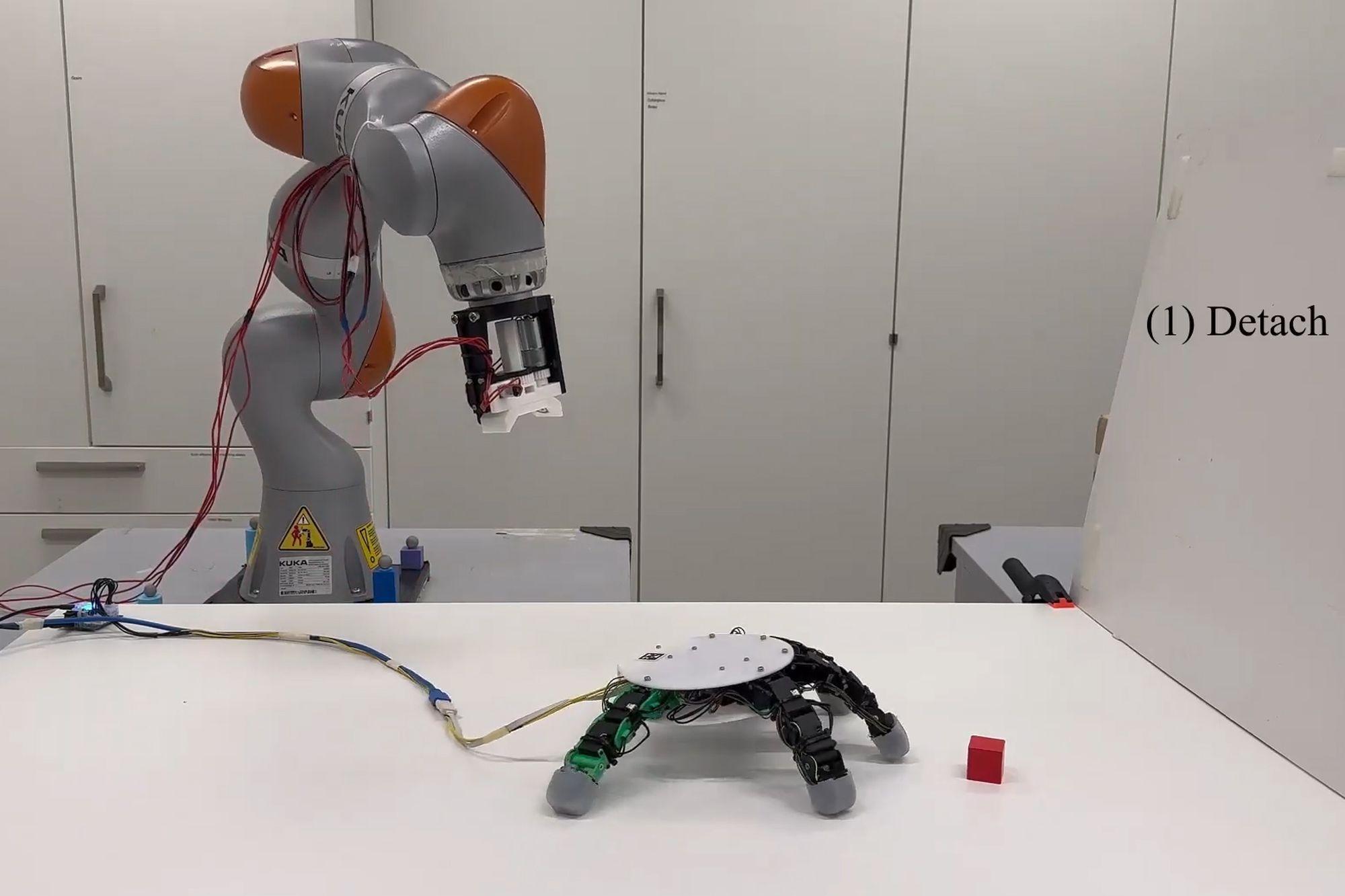

Robot with smart grip

NASA’s goal of conquering the Sun

Apple launches a new feature that makes it easier to use your phone while sitting on vehicle

Google Photos launches smart search feature “Ask for photos”

Roku streams live MLB baseball games for free

Gun detection AI technology company uses Disney to successfully persuade New York

Hackers claim to have collected 49 million Dell customer addresses before the company discovered the breach

Thai food delivery app Line Man Wongnai plans to IPO in Thailand and the US in 2025

Google pioneered the development of the first social networking application for Android

AI outperforms humans in gaming: Altera receives investment from Eric Schmidt

TikTok automatically labels AI content from platforms like DALL·E 3

Dell’s data was hacked, revealing customers’ home address information

Cracking passwords using Brute Force takes more time, but don’t rejoice!

US lawsuit against Apple: What will happen to iPhone and Android?

The UAE will likely help fund OpenAI’s self-produced chips

AI-composed blues music lacks human flair and rhythm

iOS 17: iPhone is safer with anti-theft feature

Samsung launches 2024 OLED TV with the highlight of breakthrough anti-glare technology
